Google’s SIMA 2 agent uses Gemini to reason and act in virtual worlds

Google DeepMind has released a research preview of SIMA 2, the latest version of its generalist AI agent, which builds in the language and reasoning capabilities of Gemini, Google’s large language model. Where the original SIMA mainly followed instructions, SIMA 2 is designed to understand its environment and interact with it more dynamically. The original SIMA was trained extensively on video game footage and learned to operate in a range of 3D games with some human-like skill, but it struggled with complex tasks, completing them only 31% of the time compared with 71% for humans.

According to Joe Marino, a senior research scientist at DeepMind, SIMA 2 is a significant leap in capability. The new agent is more versatile: it can complete complex tasks in unfamiliar environments and improve itself based on its own experience. DeepMind sees this capacity for self-improvement as progress toward more adaptable general-purpose robots and toward artificial general intelligence, meaning systems that can learn new skills and apply knowledge across diverse contexts.

DeepMind stresses the importance of “embodied agents”: AI systems that perceive and act in a physical or virtual world through a body, much as a robot or a human does. It contrasts them with non-embodied agents that act only on software and data, such as managing calendars or executing code. Jane Wang, a senior staff research scientist at DeepMind, emphasized that SIMA 2’s capabilities extend well beyond gaming: the agent must understand what a user is asking and respond with genuine common-sense reasoning.

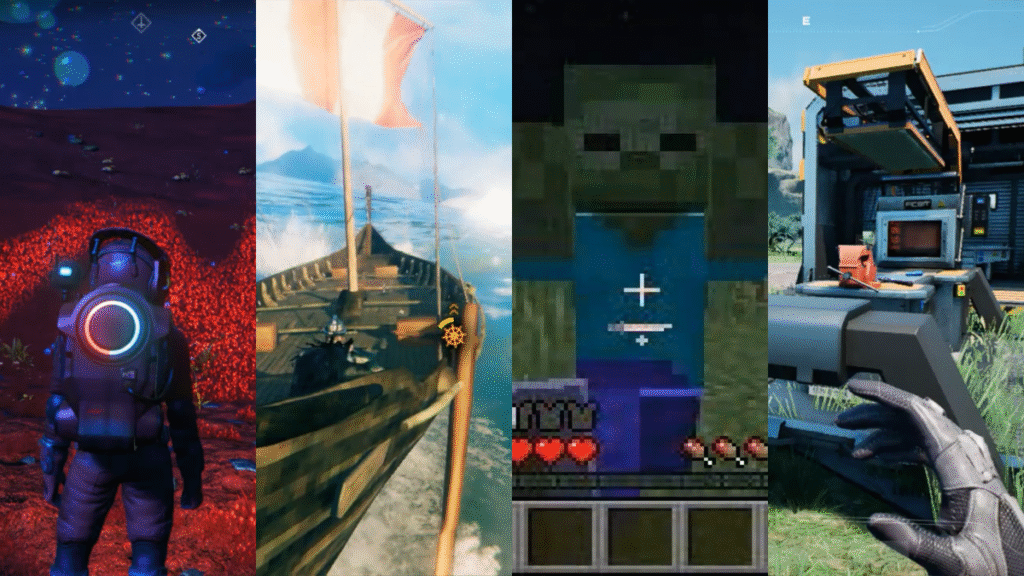

Built on the Gemini 2.5 Flash-Lite model, SIMA 2 doubles the performance of the original version. The combination pairs Gemini’s language and reasoning abilities with the embodied skills SIMA learned in training. In demonstrations in games such as “No Man’s Sky,” the agent described its surroundings and decided what to do next, for example responding to a distress signal. SIMA 2 also reasons through tasks internally: when a directional instruction referred to the color of a ripe tomato, it first worked out that ripe tomatoes are red and then moved toward the red destination. It can even follow instructions given as emoji, such as a command to chop down a tree.

SIMA 2 can also operate in photorealistic worlds generated by DeepMind’s Genie world model, correctly identifying and interacting with objects such as benches, trees, and butterflies. In addition, it uses Gemini to improve itself with little additional human data. Whereas the original SIMA was trained entirely on human gameplay, SIMA 2 uses that data only as a starting point. Separate Gemini models then generate new tasks for the agent and score its attempts, so it can refine its behavior through trial and error guided by AI feedback rather than human intervention.

DeepMind sees SIMA 2 as an important step toward more general-purpose robots. Frederic Besse, a senior staff research engineer, noted that a robot performing real-world tasks needs two things: a high-level understanding of its environment and the reasoning to act on it. A robot asked to check what is in the pantry, for example, must understand concepts such as “beans” and “cupboard,” navigate to the right place, and then carry out the necessary subtasks. SIMA 2 mainly addresses this high-level understanding and reasoning; low-level control of fine motor actions is still under development.

The team has not given a timeline for deploying SIMA 2 in physical robots and noted that DeepMind’s recent robotics foundation models were developed separately, using different training methods. For now, the SIMA 2 preview is meant to showcase DeepMind’s progress, and the team plans to explore collaborations and potential applications in other fields.